How Long to Run A/B Tests: the Known and Unknown

Paul Koch, Former Data & Analytics Director

Although split testing has become an essential part of any sound digital strategy, many people have misconceptions about the process -- especially the amount of time needed to get results. When relying solely on anecdotes from others, one can easily over- or under-estimate the time needed to reach a statistically sound conclusion.

In this post, I’ll visualize the factors that can affect test duration and explain approaches to getting faster results.

But, first, an anecdote! One of my favorite projects recently involved a series of more than 40 split tests over the course of three months. By testing changes on just one page, we improved revenue per session for viewers of that page by more than 50% -- a big improvement for a client that generates $12M+ in digital revenue each year through their site overall. Running about 5 tests per week, we’d meet with the client team at least once a week to discuss current results, what we'd learned, and how to adapt the next week’s tests based on the current week’s findings. That weekly cycle worked well for this particular client, but for other clients it would be unnecessarily slow or unsustainably fast.

Several factors affect the time a test needs to reach a conclusive result:

- total visitors

- number of test variants

- the current conversion rate

- the expected conversion rate improvement (you’ll see that this is the X-factor)

Duration calculators, such as this one from VWO, are helpful, but they don’t help you visualize the range of possible test durations. You usually want to accumulate enough data to be between 90% and 99% confident that the test result is not due to random variation (in other words, that it is statistically significant). For this post, we'll use 95%.
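To make the four factors above concrete, here's a minimal Python sketch of a duration estimate. It is not VWO's formula. It assumes a standard two-sided z-test on two proportions, 80% statistical power, a 30-day month, and traffic split evenly across variants; all of those are assumptions made for this sketch, and the function names are hypothetical. Its output won't necessarily match the curves in this post.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variant(baseline, expected, confidence=0.95, power=0.80):
    """Approximate visitors each variant needs (normal approximation).

    Assumption: two-sided z-test on two proportions, 80% power by default.
    """
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = baseline * (1 - baseline) + expected * (1 - expected)
    return ceil((z_alpha + z_beta) ** 2 * variance / (expected - baseline) ** 2)


def days_to_significance(monthly_visitors, baseline, expected,
                         variants=2, confidence=0.95, power=0.80,
                         days_per_month=30):
    """Estimated test duration in days, given the four factors from the list.

    Assumption: traffic is split evenly, and no correction is applied for
    comparing more than two variants.
    """
    daily_visitors = monthly_visitors / days_per_month
    total_needed = variants * visitors_per_variant(
        baseline, expected, confidence, power)
    return total_needed / daily_visitors


# Example: 25,000 monthly visitors, 5% baseline, 7% expected rate.
print(round(days_to_significance(25_000, 0.05, 0.07), 1))
```

Changing `power` or `confidence` moves the estimate noticeably, which is one reason different calculators disagree on the same inputs.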

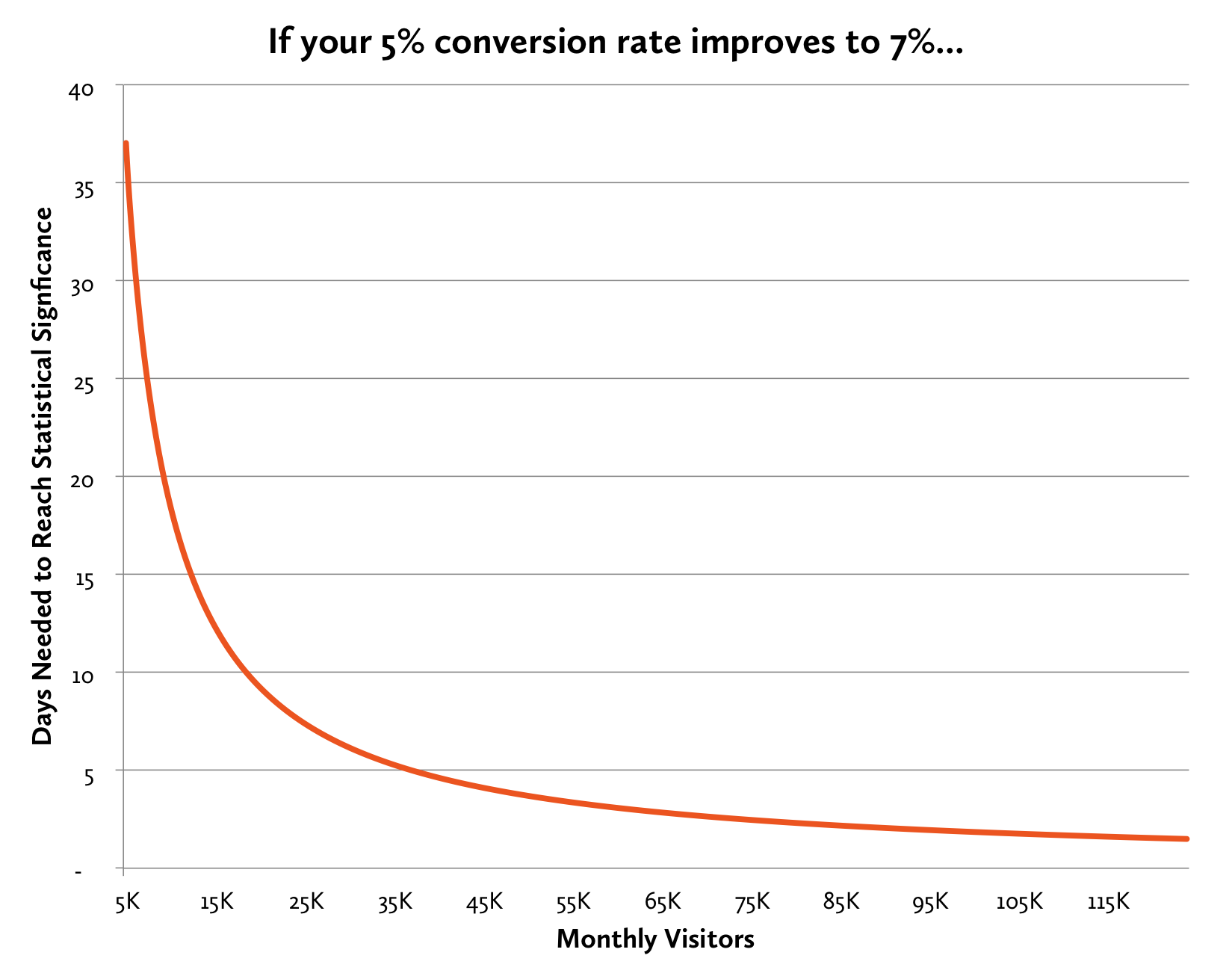

For example, consider a site with a 5% conversion rate. If a test version converts at 7%, here’s how many days you’ll need to reach 95% statistical significance, depending on the site traffic:

To get results in 7 days or fewer, you need at least 25,000 monthly visitors. With fewer visitors, the time required increases notably.

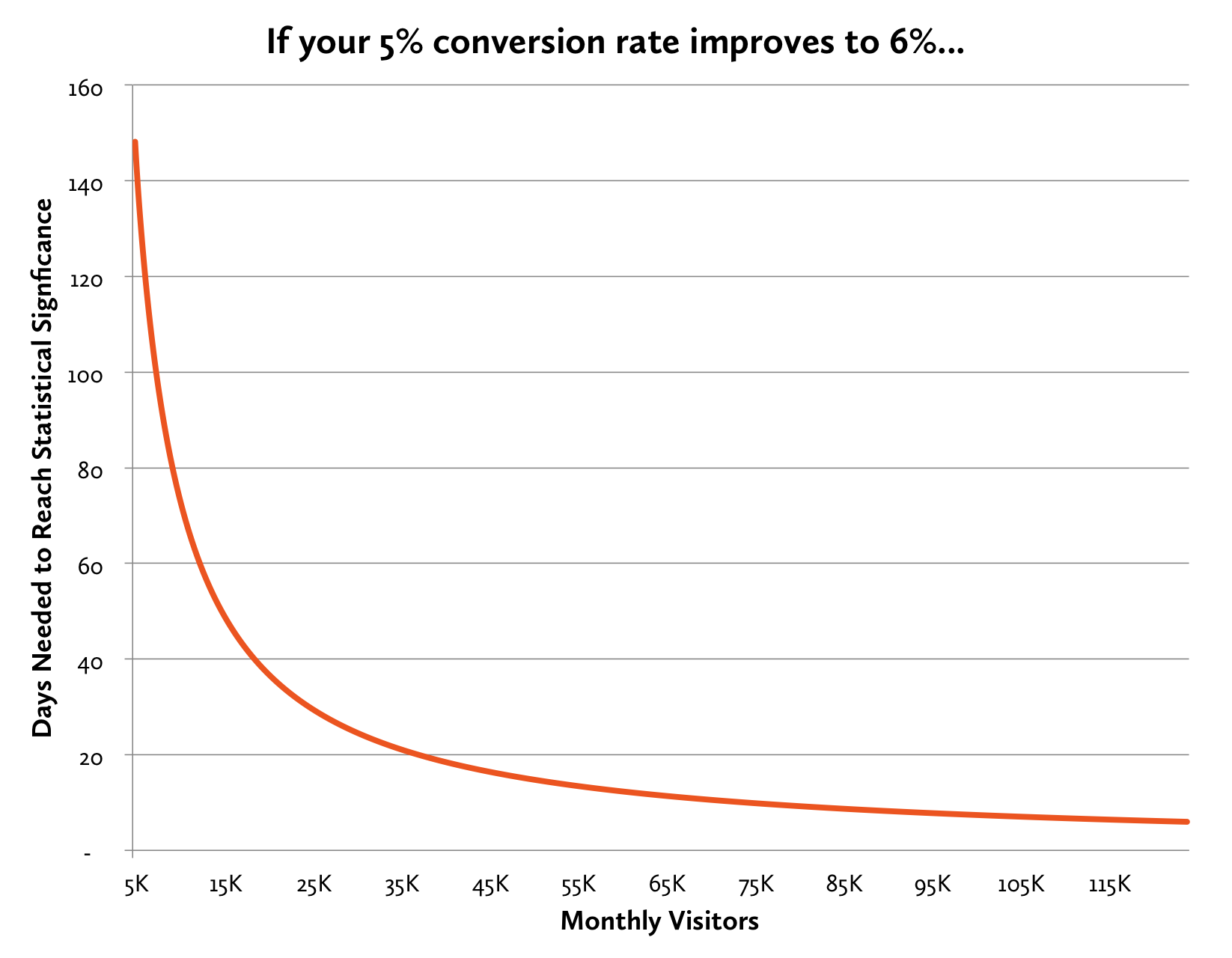

A conversion rate improvement from 5% to 7% is a 40% improvement in site effectiveness. It’s rare (though not impossible) to achieve this 40% improvement with a single test. What if the test led to a 20% improvement instead -- moving from a 5% conversion rate to 6%? Our duration changes drastically:

Now, to get results in 7 days, we need about 99,000 monthly visitors instead of 25,000. For a site with 25,000 monthly visitors, the results reached in 7 days with a 7% conversion rate require 30 days with a 6% conversion rate. Here’s why, as I mentioned above, “expected conversion rate” is the X-factor: in this example, a single percentage point difference means that the test takes more than 4 times as long to reach a conclusive result.
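One way to see why the expected lift dominates: under a common normal-approximation formula for comparing two proportions (an assumption here, not necessarily what the VWO spreadsheet uses), the visitors needed per variant are roughly

$$
n \approx \frac{\left(z_{1-\alpha/2} + z_{1-\beta}\right)^2 \left[\,p_1(1-p_1) + p_2(1-p_2)\,\right]}{(p_2 - p_1)^2}
$$

where $p_1$ is the current conversion rate, $p_2$ is the expected one, and the $z$ terms are fixed by the chosen confidence level and power. The gap $p_2 - p_1$ is squared in the denominator, so shrinking it from 2 percentage points (5% to 7%) to 1 point (5% to 6%) multiplies the required traffic by a factor of about 4. The variance term in the numerator shifts the exact multiple a little:

$$
\frac{n_{5\% \to 6\%}}{n_{5\% \to 7\%}} \approx \frac{0.1039 / 0.01^2}{0.1126 / 0.02^2} \approx 3.7
$$

Calculators that use different variance or power conventions land on somewhat different multiples, but the inverse-square relationship holds.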

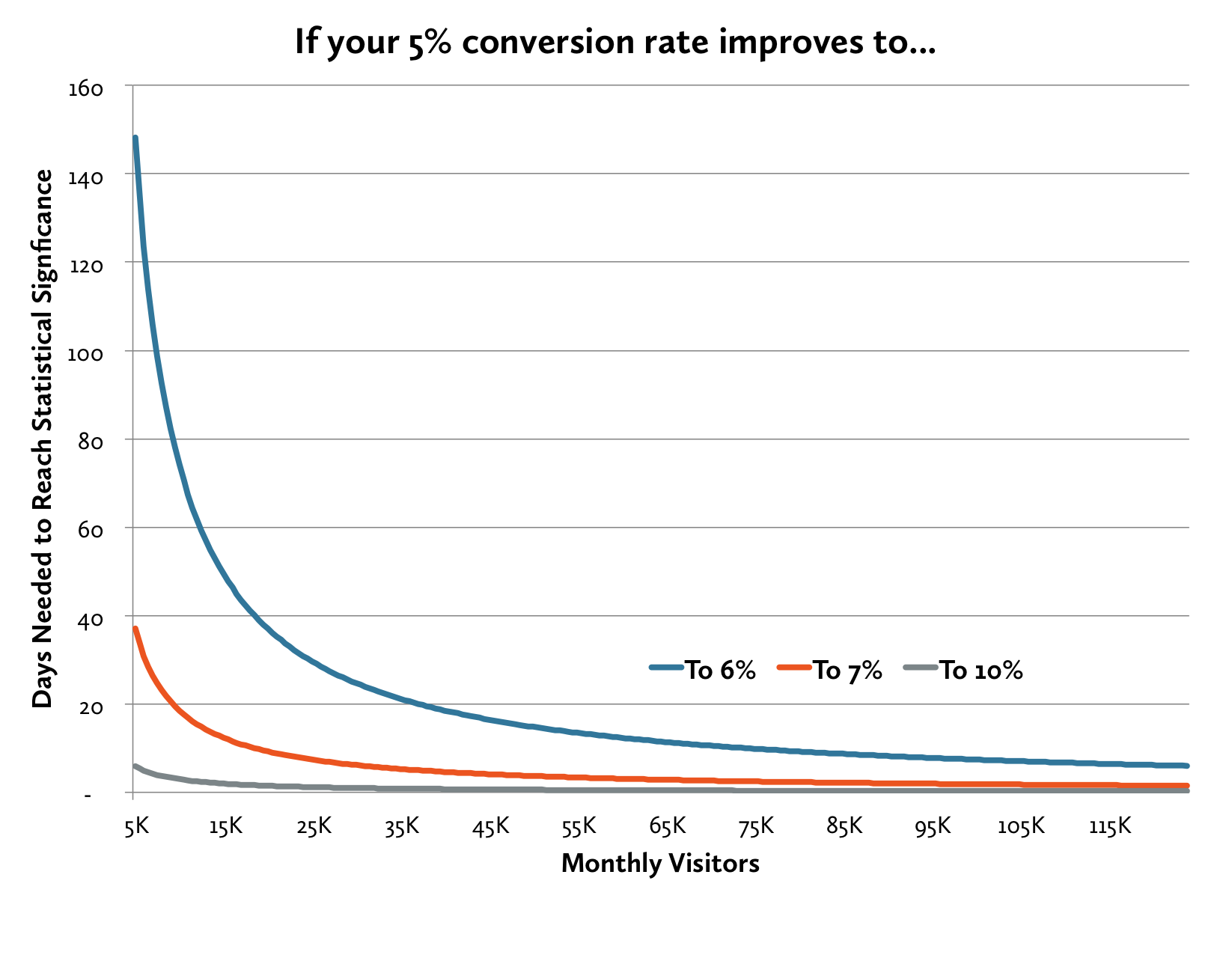

Just for fun, let’s see what happens if a test can double our conversion rate:

In this case, traffic levels matter far less. You can show statistical significance in 7 days with just 4,000 monthly visitors -- and if you have 20,000 visitors or more, you’ll get conclusive results in a single day.

Now, let’s consider a different question: if your site is high-volume, how small a conversion rate change will give you statistically significant results? For these high-traffic sites, even the tiniest improvement can mean big results -- for example, Google’s famous “shade of blue link test” reportedly nets the company $200 million in additional ad revenue every year. It’s common for people to underestimate the time needed in these high-volume instances.

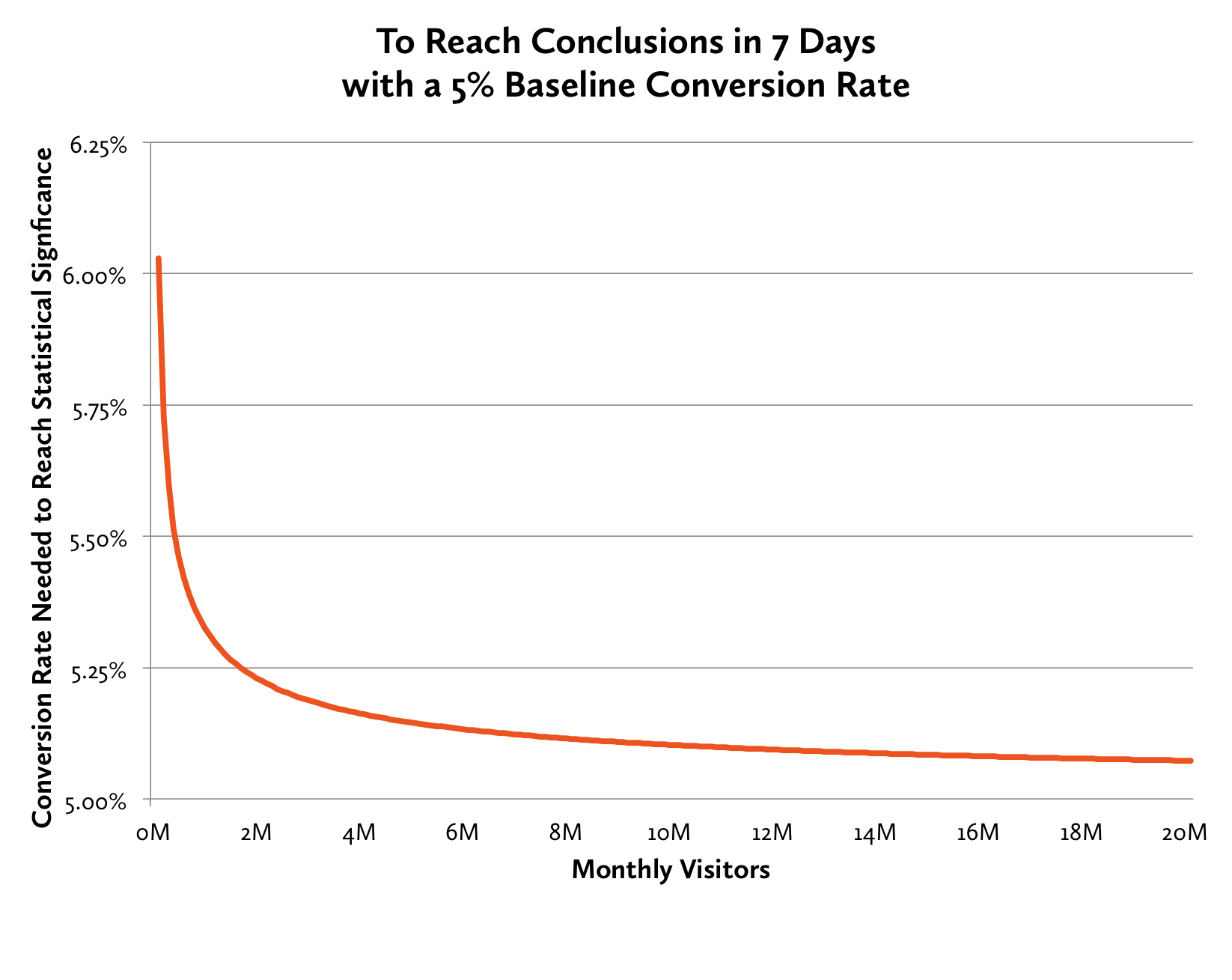

Assuming our 5% conversion rate baseline again, here’s the conversion rate we’d need to achieve to get results in 7 days, given different traffic levels:

If your site sees 10 million visitors per month, then your conversion rate only needs to change from 5.0% to 5.1% for you to be 95% confident in the results within 7 days.

To get results more quickly, consider these options:

- Test global elements, such as the navigation or site-wide page stylings. You’ll reach all your visitors, rather than only those who happen to reach a particular page.

- Test two versions at a time, instead of running multivariate tests. Stack the winner up against your next idea, and allow the winning version to continue forward. (See KV’s post on the topic here.) While this approach introduces seasonality factors into your testing, you can ultimately test your final winning version against the original version to understand the magnitude of improvement.

- Show your test to all your visitors, instead of just a subset of them.

- If your site gets relatively low traffic, make bold changes instead of tiny tweaks.

Of course, test duration is just one factor in an overall testing plan. We must also consider factors such as risk mitigation that may lead us to test only a percentage of visitors or to make less-sweeping changes. In your test planning, consider running sensitivity analyses with a calculator like VWO’s above: estimate the time you may need given several different conversion rate improvement scenarios.
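If you'd rather script the sensitivity analysis than re-enter numbers by hand, a rough sketch might look like the following. It does not call VWO's calculator. It substitutes a textbook normal-approximation formula with 80% power, a 30-day month, and an even traffic split, all of which are assumptions, and the function names and the list of lift scenarios are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def estimated_days(monthly_visitors, baseline, relative_lift,
                   variants=2, confidence=0.95, power=0.80):
    """Rough days to significance for one improvement scenario.

    Assumption: two-sided z-test on two proportions, 30-day month.
    """
    expected = baseline * (1 + relative_lift)
    z = (NormalDist().inv_cdf(1 - (1 - confidence) / 2)
         + NormalDist().inv_cdf(power))
    variance = baseline * (1 - baseline) + expected * (1 - expected)
    per_variant = z ** 2 * variance / (expected - baseline) ** 2
    return ceil(variants * per_variant / (monthly_visitors / 30))


def sensitivity_table(monthly_visitors, baseline,
                      lifts=(0.10, 0.20, 0.40, 1.00)):
    """Map each relative-lift scenario to its estimated duration in days."""
    return {lift: estimated_days(monthly_visitors, baseline, lift)
            for lift in lifts}


# Example: 25,000 monthly visitors and a 5% baseline conversion rate.
for lift, days in sensitivity_table(25_000, 0.05).items():
    print(f"{lift:>5.0%} lift -> about {days} days")
```

Reading the scenarios side by side makes it easier to decide up front whether a test is worth running at your current traffic, or whether a bolder change is needed.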

Hope this info helps you set better upfront expectations with others in your organization -- happy testing!

Hat tip goes to VWO's Excel calculator, which I modified to generate the curves above.