Cross-platform Augmented Reality with Unity

Prayash Thapa, Former Developer

We recently shipped a mobile AR app for a geoscience museum. Here are a few lessons we learned along the way about building cross-platform AR apps in Unity.

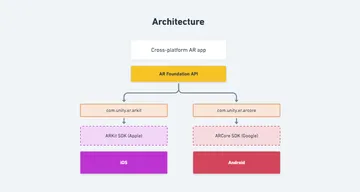

Unity: An Introduction #

Unity is a cross-platform game engine developed by Unity Technologies. It is one of the most popular tools out there for 2D and 3D game development. Over the years, Unity has gradually introduced a suite of tools to support 3D content creation, VFX, and filmmaking. Having embraced cross-platform development since its inception in 2006, Unity has allowed developers to compile their apps to multiple targets, making it an excellent tool for building mobile games. Unity has worked closely with Apple and Google over the years to integrate the ARKit and ARCore SDKs into its library. In 2018, Unity went public with a unifying API for both platforms in a package called AR Foundation.

AR Foundation #

AR Foundation is a library built by Unity to allow developers to build cross-platform augmented reality applications. Unity 2018.1 includes built-in multi-platform support for AR. These APIs are in the UnityEngine.Experimental.XR namespace, and consist of a number of Subsystems. These Subsystems comprise the low-level API for interacting with AR. The AR Foundation package wraps this low-level API into a cohesive whole and enhances it with additional utilities, such as AR session lifecycle management and the creation of GameObjects to represent detected features in the environment.

At a high level, AR Foundation is a set of MonoBehaviours (base class from which every Unity script derives) for dealing with devices that support the following features:

- Planar surface detection (horizontal + vertical)

- Point clouds, also known as feature points

- Reference points: an arbitrary position and orientation that the device tracks

- Lighting estimation for average color temperature and brightness in physical space

- World tracking: tracking the device's position and orientation in physical space

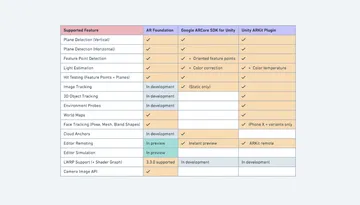

As this package is rapidly evolving alongside the AR SDKs by Apple and Google, we can expect that its API will also evolve to support the latest and greatest features. At the time of writing (July 2018), this chart is an accurate reflection of what's currently available:

This is quite the feature set! If you're just getting started with augmented reality development, AR Foundation should have you covered. Many of these pieces will be completed by the end of 2019. Check out the Unite LA 2018 talk "Rendering techniques for augmented reality and a look ahead at AR Foundation" if you're stoked on getting a preview of all the exciting stuff the Unity team is working on for AR developers.

User Experience #

During development, we ran into several hurdles pertaining to the general UX of the app. Here are some high-level ideas to keep in mind during design and development.

Responsive UI #

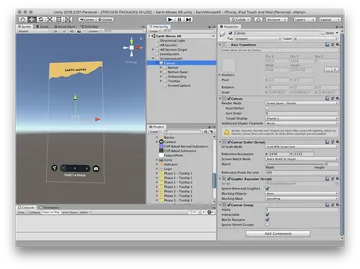

Setting up a responsive UI canvas takes some legwork, but tackling it first will prevent headaches later on. In order to make a flat 2D UI on top of the AR camera, you'll need to add a Canvas GameObject to your Scene Hierarchy. The following settings in your Canvas Scaler component should be all you need. Make sure you're using the Anchors setting in the Rect Transform component of your UI elements to position them inside of the Canvas. Consult the Unity Layout Manual for more information on this.
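If you prefer to keep these settings in code rather than in the Inspector, a minimal sketch might look like the following. The class name `ResponsiveCanvasSetup`, the 1080x1920 reference resolution, and the 0.5 match value are assumptions for illustration, not necessarily the values we used; pick the ones that match your own design mockups.

```csharp
using UnityEngine;
using UnityEngine.UI;

// Hypothetical helper: attach to the GameObject that holds your UI Canvas.
[RequireComponent(typeof(Canvas), typeof(CanvasScaler))]
public class ResponsiveCanvasSetup : MonoBehaviour {
    // Assumed design resolution (portrait phone). Replace with your mockup size.
    public Vector2 referenceResolution = new Vector2(1080f, 1920f);

    void Awake() {
        // Draw the flat 2D UI on top of whatever the AR camera renders.
        GetComponent<Canvas>().renderMode = RenderMode.ScreenSpaceOverlay;

        CanvasScaler scaler = GetComponent<CanvasScaler>();
        // Scale UI elements relative to the reference resolution
        // instead of using a constant pixel size.
        scaler.uiScaleMode = CanvasScaler.ScaleMode.ScaleWithScreenSize;
        scaler.referenceResolution = referenceResolution;
        // Blend between matching the screen's width (0) and height (1).
        scaler.screenMatchMode = CanvasScaler.ScreenMatchMode.MatchWidthOrHeight;
        scaler.matchWidthOrHeight = 0.5f;
    }
}
```

Setting these values in the Inspector achieves the same thing; the script is only useful if you create canvases at runtime or want the configuration under version control in a readable form.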

AR Onboarding #

Generally, onboarding new users onto an AR app is a big ask. We're asking the user to:

- Download an app of considerable size from the App Store/Play Store, probably on a mobile connection. AR apps typically package in bulky SDKs, 3D models, and other asset files, so this could be a big bundle.

- Give permission to access the device's camera. If the user accidentally denies permission, they have to go through additional steps of approving manually. iOS will not display the alert again, so the user is forced to go into the Settings app to change this. Android is a little more forgiving and will allow you to ask multiple times in-app.

- Dive into the experience. The user might not have intuition about what to do. They might not even know what AR is. How do we make this experience frictionless straight off the bat?

In order to ensure that this entire process goes smoothly, it's worth thinking about how you might design the onboarding experience, because it's something that can make or break the app. Typically, when they start the app, users need to know what they have to do to get to the experience with minimal oversight. It's common to have some sort of animation to tell the user to calibrate the camera to their surroundings as this is what AR SDKs require before anything else can happen.

A typical flow for an AR app that allows the user to project a 3D model in world space might look like:

Consider creating a UIManager component attached to your UI Canvas which orchestrates the onboarding flow. You can use Unity's native Animator objects to toggle different onboarding animations and guide your user. Here's an example script that starts off the app with a barebones UI, fades between the different onboarding animations, and reveals the app UI once a model has been placed.

```csharp
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.XR.ARFoundation;

public class UIManager : MonoBehaviour {
    const string FadeOffAnim = "FadeOff";
    const string FadeOnAnim = "FadeOn";

    /// <summary>
    /// The PlaneManager which we'll hook into to determine
    /// what instruction we should be displaying.
    /// </summary>
    public ARPlaneManager planeManager;
    public GameObject appUI;
    public Animator moveDeviceAnimation;
    public Animator tapToPlaceAnimation;

    private static List<ARPlane> _planes = new List<ARPlane>();
    private bool _showingTapToPlace = false;
    private bool _showingMoveDevice = true;

    private void Start() {
        // Initially, the application UI should be hidden until a model is placed
        appUI.GetComponent<CanvasGroup>().alpha = 0.0f;
    }

    void OnEnable() {
        ARSubsystemManager.cameraFrameReceived += FrameChanged;
    }

    void OnDisable() {
        ARSubsystemManager.cameraFrameReceived -= FrameChanged;
    }

    void FrameChanged(ARCameraFrameEventArgs args) {
        // Listen to changes every frame and guide the User
        // to calibrate the app to their current scene.
        // This includes telling them when to scan, and
        // when the scene is ready for placing a model.
        if (PlanesFound() && _showingMoveDevice) {
            // Once at least one plane is detected, we'll switch to a different
            // anim to tell the User to place the model
            moveDeviceAnimation.SetTrigger(FadeOffAnim);
            tapToPlaceAnimation.SetTrigger(FadeOnAnim);
            _showingTapToPlace = true;
            _showingMoveDevice = false;
        }
    }

    bool PlanesFound() {
        if (planeManager == null) { return false; }
        planeManager.GetAllPlanes(_planes);
        return _planes.Count > 0;
    }

    // Call this from your placement logic once the model has been placed.
    public void PlacedObject() {
        // Fade the App UI in. Helpers.Fade is a coroutine that fades a
        // CanvasGroup to a target alpha over a duration (not shown here).
        StartCoroutine(Helpers.Fade(appUI.GetComponent<CanvasGroup>(), 1.0f, 0.3f));

        if (_showingTapToPlace) {
            tapToPlaceAnimation.SetTrigger(FadeOffAnim);
            _showingTapToPlace = false;
        }
    }
}
```

Don't forget! Your UIManager object needs to be hooked up to a few things via the Unity Inspector. These will ideally be your AR Session Origin, your UI canvas, and any onboarding animations that you've created inside of Unity.

Making the user aware of what's happening #

There is quite a bit of computer vision magic happening in the background when it comes to scanning and calibration, and users most likely won't be aware of the mechanism behind the tracking work the SDK is doing. AR Foundation's built-in state machine, ARSubsystemManager, exposes critical information about the active AR session. Subscribe to the ARSubsystemManager.systemStateChanged callback so the app can tell the user what it is doing. In particular, let them know if the app is unable to track properly, whether due to featureless surfaces or suboptimal lighting, or if their device needs to install additional software.
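As a rough sketch of that subscription, the script below maps each system state to a short message on a UI label. It assumes the AR Foundation 1.0 preview API, where the callback receives an `ARSystemStateChangedEventArgs` carrying an `ARSystemState`; later versions of the package expose session state differently, so check the version you have installed. The `TrackingStatusLabel` class, the `statusText` field, and the message strings are all hypothetical.

```csharp
using UnityEngine;
using UnityEngine.UI;
using UnityEngine.XR.ARFoundation;

// Hypothetical component: shows a human-readable AR status on a UI Text.
public class TrackingStatusLabel : MonoBehaviour {
    // Assign a UI Text from your Canvas in the Inspector.
    public Text statusText;

    void OnEnable() {
        ARSubsystemManager.systemStateChanged += OnSystemStateChanged;
    }

    void OnDisable() {
        ARSubsystemManager.systemStateChanged -= OnSystemStateChanged;
    }

    void OnSystemStateChanged(ARSystemStateChangedEventArgs args) {
        statusText.text = MessageFor(args.state);
    }

    // Translate the SDK's state into wording a first-time user can act on.
    static string MessageFor(ARSystemState state) {
        switch (state) {
            case ARSystemState.Unsupported:
                return "Sorry, this device does not support AR.";
            case ARSystemState.NeedsInstall:
                return "Additional AR software needs to be installed.";
            case ARSystemState.Installing:
                return "Installing AR software...";
            case ARSystemState.SessionInitializing:
                return "Move your device slowly. Well-lit, textured surfaces work best.";
            case ARSystemState.SessionTracking:
                return ""; // Tracking is working; stay out of the user's way.
            default:
                return "Starting AR...";
        }
    }
}
```

Keeping the wording in one function makes it easy to swap the plain text for icons or animations later without touching the event wiring.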

Mission-critical performance #

Processing power is limited on mobile, especially if you plan on deploying an app that is targeting the entire spectrum of Android and iOS devices on the market. The App Store and Play Store prevent the installation of AR apps unless the user's device meets the system requirements, but it's still good to test apps on as wide of a performance spectrum as possible. Because performance is critical in AR apps, it's important to benchmark in tandem with development.

- As the rulebook says, anything below 30fps is going to severely degrade the experience. Unity provides handy tools to profile your apps, but one of the easiest ways to measure your performance is to add an FPS counter to your app during development. Try stress testing your app and figuring out when the FPS drops significantly to figure out where the critical points are.

- Make use of Unity's Prefabs system. Constructing meshes in realtime is processing-intensive, and for this reason, Unity introduced the Prefab system, which lets you instantiate preconfigured GameObjects at runtime at a very low cost.

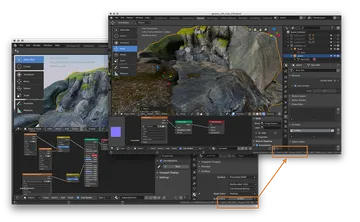

- If you're using 3D models in your app, ensure that textures are as small as possible and that they are compressed. Try to bake lighting and texture maps into a single map. Blender is a great tool for baking such maps all into one. Compress textures inside of Unity as far as you can, stopping before the loss of fidelity becomes drastic.

- The vertex count of your model also makes a huge difference, so consider retopologizing your meshes to lower the number of vertices as much as possible. High vertex count can cause a lot of load on mobile GPUs, causing phones to overheat and lose battery very quickly. The Decimate Modifier in Blender is a quick and easy way to reduce the polygon count of your mesh.

- Unity comes with a plethora of built-in shaders at your disposal, but they should be approached with some discretion, especially for mobile applications. Avoid using expensive shaders at all costs. In our case, we were using a refractive shader for rendering the plunge pool of the waterfall, and it caused even high-end phones to heat up very quickly. Striving for realism at the cost of user experience is not a sensible trade-off, so finding some sort of compromise here is a good call, even if the art direction suffers. Refer to the Shader Performance docs by Unity if you're trying to decide what shaders to use.
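The FPS counter mentioned in the first point can be very small. Here is one possible sketch: a plain C# class (no Unity dependency, so it is easy to unit test) that smooths frame times with an exponential moving average so the number on screen doesn't flicker. The `FpsMeter` name and the default smoothing factor of 0.1 are assumptions for illustration.

```csharp
// Hypothetical helper: turns per-frame durations into a readable FPS figure.
public class FpsMeter {
    private readonly float _smoothing;
    private float _averageDelta; // Smoothed frame duration in seconds.

    // smoothing: 0..1, higher values react faster but flicker more.
    public FpsMeter(float smoothing = 0.1f) {
        _smoothing = smoothing;
    }

    // Current smoothed frames per second (0 until the first sample).
    public float Fps {
        get { return _averageDelta > 0f ? 1f / _averageDelta : 0f; }
    }

    // Feed one frame's duration in seconds; returns the smoothed FPS.
    public float Sample(float deltaSeconds) {
        // Ignore non-positive durations (e.g. while the app is paused).
        if (deltaSeconds <= 0f) { return Fps; }

        if (_averageDelta <= 0f) {
            // The first sample seeds the average.
            _averageDelta = deltaSeconds;
        } else {
            // Exponential moving average of the frame duration.
            _averageDelta += (deltaSeconds - _averageDelta) * _smoothing;
        }
        return Fps;
    }

    // True once the smoothed FPS has dropped under the given target.
    public bool IsBelow(float targetFps) {
        return Fps > 0f && Fps < targetFps;
    }
}
```

In Unity, you would typically create one `FpsMeter` in a MonoBehaviour, call `Sample(Time.unscaledDeltaTime)` from `Update()`, and write the result to a UI Text on your Canvas. `IsBelow(30f)` is a convenient hook for logging the moments where the app dips under the 30fps floor during stress testing.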

Leveraging animations #

Due to the tangible nature of AR, objects that pop into view instantaneously are much more jarring than traditional 2D UI elements. Take care to ease them into the scene, whether with some sort of transform or a fade. In Unity, you can achieve this by leveraging StartCoroutine and Mathf.Lerp. Other options for easing are also available inside of Unity, but linear interpolation is a decent starting point.

For example, here's how we might fade the material of a GameObject inside of Unity. Make sure your model material's rendering mode is set to Transparent mode and not Opaque.

```csharp
using System.Collections;
using UnityEngine;

public static class Helpers {
    public static IEnumerator FadeTo(Material material, float targetOpacity, float duration) {
        // Cache the current color of the material, and its initial opacity.
        Color color = material.color;
        float startOpacity = color.a;

        // Track how many seconds we've been fading.
        float t = 0;

        while (t < duration) {
            // Step the fade forward one frame.
            t += Time.deltaTime;

            // Turn the time into an interpolation factor between 0 and 1.
            float blend = Mathf.Clamp01(t / duration);

            // Blend to the corresponding opacity between start & target.
            color.a = Mathf.Lerp(startOpacity, targetOpacity, blend);

            // Apply the resulting color to the material.
            material.color = color;

            // Wait one frame, and repeat.
            yield return null;
        }
    }
}

// Attach to the GameObject whose Renderer holds the material to fade.
public class ModelFader : MonoBehaviour {
    // A 1 second fade-in for a GameObject with an initial opacity of 0
    public void FadeIn() {
        StartCoroutine(Helpers.FadeTo(GetComponent<Renderer>().material, 1.0f, 1.0f));
    }
}
```

Development workflow #

Development in Unity is smooth, but it's quite likely you will run into some issues working with an experimental API like AR Foundation. It's best to integrate Git and Git LFS into your workflow right away. We ran into many issues where Unity would crash unexpectedly (especially during remoting).

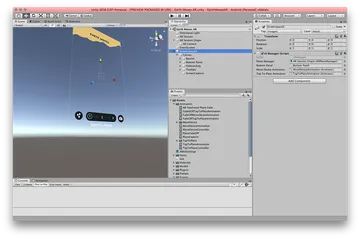

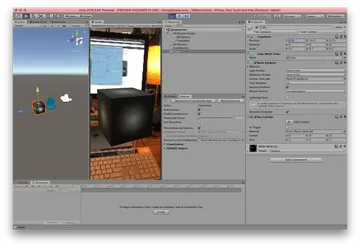

Streaming Sensor Data to Unity via XR Remote #

AR apps require constant iteration, which means you'll be building onto your device and testing all the time. Deploying a build takes several minutes each time, which could cripple development. To streamline this, Unity has a remoting tool that streams sensor data from your device into your Unity session via USB or the local network, letting you test the app without deploying it. It is essential to get the remoting tool working for your project. Refer to this excellent video by Satwant Singh, which guides you through installing the remoting tool. Be warned that this is an experimental tool, so your mileage may vary.

Building to Device #

Building onto your device should be a straightforward process, though it does take a few minutes per build. You may run into the black screen problem, or have trouble exporting an Android project from Unity. In cases like these, make sure you don't have conflicting packages installed. The black screen problem is notoriously elusive, so if you run into this issue, do consult this GitHub thread for possible solutions.

We found that building and running from Unity directly on an Android device is much faster than generating an Xcode project and building onto an iOS device. If that doesn't work, you can generate an APK file from Unity's Build Settings and use adb (Android Debug Bridge) to install the APK file onto your device manually. You'll need to have USB debugging enabled on the Android device in order to do this:

```bash
# List out all connected Android devices and grab the serial number
adb devices

# Install the .apk file onto your device using the serial number from above
adb -s <serial-number> install ~/Desktop/<your-app-name>.apk
```

Fin #

Hopefully this guide makes navigating AR development in Unity just a tad easier. There is still a lot to be discussed, and the tools will only get better with time. If you've got any tips to share on AR development with Unity, feel free to leave a comment below or tweet me @_prayash! If you've got an AR app you would like to prototype or talk about, we'd love to chat!