Chatbot 101: Alexa, Do I Need a Chatbot?

Amanda Cavanaugh, Former Senior Product Designer

"If I could rearrange the alphabet, I'd put U and I together." - Google Assistant, when asked to decide your chatbot strategy for next quarter.

A long, long time ago, I worked on an Alexa Skill. During usability testing, one user asked Alexa a question about CD bank account rates and, after thinking for a moment with her ever-revolving blue indicator, Alexa responded, “Sure, I’ll play you something.” Alexa paused, then from that little black disc on the desk came the unmistakable melodies of Garth Brooks.

We were flabbergasted. How did CD rates end up as “Play Garth Brooks”?

After the test, the UX group reconvened and rewatched that moment in our recording over and over. How did that happen? Nowhere in our utterances did we account for that input, and we absolutely had no intention of Garth Brooks ever playing a starring role in our Skill.

Well, we said, that’s AI, baby - where everything looks like magic but it’s really just a complicated web of conversation maps, mathematics, probabilities, and maybe a little bit of ✨ magic ✨.

Chatbots are a part of this magic. You type something in, or make a selection from a collection of buttons, and the chatbot responds. Sometimes, the response is accurate and helpful. Sometimes, it’s completely off the wall - Garth Brooks off the wall. Often, it’s a simple “Sorry, I don’t know that”, followed by a prompt redirect to an actual human. So, when you’re tasked with determining if you need a chatbot, because an executive or director told you that it would improve your product, it might be worth asking what exactly is being asked of that chatbot: math or magic?

There are many flavors of chatbots, but they can generally be described by asking two questions.

What is its purpose?

What is its brain?

As for the purpose of a chatbot, they generally fall into three categories:

1. Task-Based Bots

Task-based chatbots can help automate processes and reduce human hours. You may be familiar with some examples - you can ask Alexa or your Google Assistant to set up alarms, add or remove calendar events, play music, confirm your dog can eat that blueberry off the floor, you name it. These types of chatbots are often employed to reduce operational cost without high training or maintenance cost. Having a bot schedule meetings, book PTO, find flights, or remind employees to submit time sheets can save time and money in the long run.

Hey Google, can dogs eat cucumbers?

Um, quickly please.

2. Chit-chat Bots

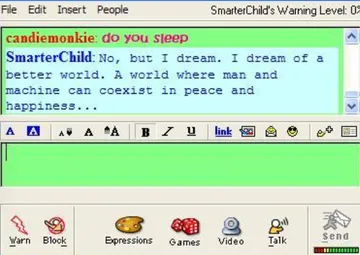

Bots built on intelligent natural language processors can serve as a neutral party for people to chat with your brand, whether or not it results in a conversion, though your mileage may vary on how good the conversation is. If you’ve asked Alexa to tell you a joke, or *throwback alert* chatted with SmarterChild on AIM back in the day, you were being entertained by - wait for it - math. Woebot, chaired by Andrew Ng of Google and Baidu fame, is another conversational interface, one that provides automated cognitive behavioral therapy, all in the form of a chatbot. Woebot is not task-based, and it is not a diagnostic tool or FAQ-oriented experience - it’s just there to listen, learn, and be a support system.

3. Help Bots

Chatbots are often used to provide access to FAQ answers and other information that shouldn’t require a human response but may be a little more complicated to find based on your website or app’s structure. These chatbots can be closed-domain or open-domain. Closed-domain bots answer questions specific to your industry or subject matter, while open-domain bots can and should be able to provide answers to pretty much any question or scenario presented to them.

You’ll find closed-domain chatbots most often on websites and in apps that require an industry-specific vocabulary. Bank of America’s Erica assistant is an example, trained to answer questions specific to finance, banking, and your accounts with that institution. Planned Parenthood’s Roo, and AARP’s Avo are also examples of closed-domain conversational interfaces.

(We actually built Avo here at Viget. You can see how we did it in our case study.)

Google Assistant, Amazon’s Alexa, and Apple’s Siri are excellent examples of open-domain experiences. They are more or less a search engine for the entire internet, plus whatever apps you have connected to them. The assistant-style bots can also complete tasks and sometimes even hold somewhat intelligible conversations. Google Assistant can even screen phone calls for you to determine whether or not you should answer.

Chatbot brains are another matter. I’m not going to pretend I’m an AI / NLP / NLU expert but the following should give you a gist of how these types of bot-brains work.

Brain 1: Menu-Based Bots

Menu- or button-based bots use a fairly simple 1:1 input-output model for communicating information to the user. The user is given a menu of options, often presented as buttons or as specific phrases that the user selects or inputs. Matched to each of these phrases is an output, which may be text-based or another set of buttons. Complex versions of these bots can rely on decision-tree logic to determine which response is given based on the input, but the bot’s brain has minimal, if any, predictive capacity. These bots are often easily organized, small in scope or domain, and don’t rely on natural language processing, as there is no freeform input from users that can’t be handled with a canned “I’m sorry, I don’t know the answer to that” response and another menu.
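To make that 1:1 model concrete, here is a minimal sketch in Python. The menu labels and replies are invented for illustration and don't come from any real product; the point is only that every accepted input maps directly to one canned output, and anything else gets the fallback plus the menu again.

```python
# Hypothetical menu, made up for this sketch: each label maps to one reply.
MENU = {
    "Check my balance": "Your balance is shown on the Accounts tab.",
    "CD rates": "You can find current CD rates on our Rates page.",
    "Talk to a person": "Connecting you with a representative.",
}

FALLBACK = "I'm sorry, I don't know the answer to that."


def menu_reply(selection: str) -> str:
    """Return the canned reply for a menu selection, or the fallback and the menu."""
    if selection in MENU:
        return MENU[selection]
    # No prediction happens here: unknown input just re-offers the options.
    options = ", ".join(MENU)
    return f"{FALLBACK} Try one of these: {options}"
```

Calling `menu_reply("Play Garth Brooks")` falls straight through to the fallback, which is exactly the trade-off: the bot is predictable, but it can't guess.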

When freeform inputs get brought into the mix, bot brains get increasingly complicated.

Brain 2: Language-Based Bots

While menu-based or button-based bots use structured input (meaning, the structure of the inputs and their output is pre-defined), language-based bots can handle inputs that aren’t pre-defined and aren’t 100% matched to an output. Language-based bots that can interpret unstructured input, like freeform text or speech, use what’s called natural language processing to understand what that text says.

Natural language processing (NLP) is a fairly broad term that is often used to encompass a part of artificial intelligence that includes interpreting what text literally says and the context that gives that text its meaning. At its core, NLP takes unstructured text and gives it structure, by way of finding keywords and relationships between those keywords. Then, the NLP tries to predict the best response based on those keywords’ closest-matching definitions. The predetermined definitions usually come from vast data sets, and the NLP is often trained endlessly on understanding the relationships between keywords and their definitions.

This predictive model might be familiar to you if you watched IBM Watson on Jeopardy! many years ago. When Watson would provide an answer, the screen would also show Watson’s confidence that it had the correct response. Similarly, when you input unstructured text into a chatbot using an NLP, the NLP algorithm takes that text and makes its best guess for what your intent was. Based on what it thinks you intend to find out, it then responds and sometimes will ask, “Was that right?” so it can continue to improve those probabilities.
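A toy version of that "best guess plus confidence" idea might look like the sketch below. It is nowhere near a real NLP engine: the intents, the keyword lists, and the scoring rule (the share of input words an intent recognizes) are all assumptions invented for this example.

```python
import re
from typing import Tuple

# Hypothetical intents and keyword lists, invented for this sketch.
INTENTS = {
    "cd_rates": {"cd", "rate", "rates", "certificate", "deposit"},
    "play_music": {"play", "song", "music", "album"},
}


def guess_intent(text: str) -> Tuple[str, float]:
    """Pick the intent whose keywords cover the largest share of the input words."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    if not words:
        return ("unknown", 0.0)
    scores = {
        name: len(words & keywords) / len(words)
        for name, keywords in INTENTS.items()
    }
    best = max(scores, key=scores.get)
    if scores[best] == 0:
        return ("unknown", 0.0)
    return (best, scores[best])
```

With these made-up lists, "what are your cd rates" lands on `cd_rates` with a confidence of 0.4, because two of its five words are recognized. Real engines learn their probabilities from training data rather than counting words, but the shape of the answer, an intent and a confidence, is similar.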

Where NLP seeks to understand what the words say, natural language understanding seeks to understand what your words mean.

Natural language understanding (NLU) seeks to apply context to the user’s literal text. Sentiment, sarcasm, colloquialisms, misspellings or slang can change the meaning of text and have influence on the appropriate response.

Not all language-based bots use NLU, and some operate from only a narrow set of definitions or vocabulary. Here’s a simple example to illustrate how a decision-tree model can be used to provide responses to unstructured input without a sophisticated NLU.

Decision-tree Logic

Let’s say a user finds a sports chatbot and is feeling like they want to be sad that day, so they input, “Pittsburgh Pirates season record.”

The first thing the bot must do is identify the user’s intent. An intent is, at its core, the goal the user wants to achieve by asking the question or hitting a button in a menu. NLPs use what’s called named-entity recognition (NER) to parse meaningful keywords from unstructured text. Once keywords are identified, they are then run against the definition list. There is a lot of math and training involved in this part of the process that is out of scope for this article, but you can find some great blog posts in the resource section at the end.

This bot is going to look at the user’s input and identify the keywords: [Pittsburgh Pirates] [season] [record]. A bot trained on these definitions should be able to understand what the user is asking for here: How many wins and losses do the Pittsburgh Pirates have?

There are a few unknowns here that the bot might not be able to manage - which season is the user referring to? If it’s the offseason right now, can we assume last season? If a season is ongoing, can we assume this season? These unknowns reduce the bot’s confidence in its response.

This bot should be able to respond with a straightforward answer to this question. At the end of the 2021 baseball season, the Pittsburgh Pirates had 61 wins and 101 losses. If the user clarified their intent by asking, “What’s the Pittsburgh Pirates’ record at home?” or “Pittsburgh Pirates 2019 home record” or “Pittsburgh Pirates last 10 games”, the bot could use those modifiers to provide a different answer. Keywords that modify the user’s intent are called entities. Entities can be used in an if/and/or logic structure to match an input with a response.
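Here is a rough sketch of pulling a team, a stat, and a couple of modifiers out of freeform text. It uses plain string and regex matching rather than a trained NER model, and the vocabulary (`TEAMS`, `STAT_WORDS`) and the function name `parse_query` are hypothetical, made up for this example.

```python
import re
from typing import Dict, Optional

# Hypothetical vocabulary for the sports bot; a real bot would have far more.
TEAMS = {"pittsburgh pirates": "Pittsburgh Pirates"}
STAT_WORDS = {"record", "standings"}


def parse_query(text: str) -> Dict[str, Optional[str]]:
    """Extract the team, the requested stat, and any modifiers from the input."""
    lowered = text.lower()
    words = set(re.findall(r"[a-z0-9]+", lowered))
    team = next((name for key, name in TEAMS.items() if key in lowered), None)
    year = re.search(r"\b(?:19|20)\d{2}\b", lowered)
    return {
        "team": team,
        # "record" and "standings" share one definition for this bot.
        "stat": "record" if words & STAT_WORDS else None,
        "venue": "home" if "home" in words else None,
        "year": year.group(0) if year else None,
    }
```

For "Pittsburgh Pirates 2019 home record" this returns the team, the stat `record`, the venue `home`, and the year `2019`. For "Pittsburgh Pirates season record" the venue and year come back as `None`, which is the missing information that lowers the bot's confidence.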

The decision tree here works on a boolean system:

If Pittsburgh Pirates and record, then current record.

If Pittsburgh Pirates and record and home, then current at home record only.

If Pittsburgh Pirates and (record or standings) and home, then current at home record only, but use the word standings if that’s what the user input. Standings and record here share the same definition and are thus interchangeable for this bot.

If Pittsburgh Pirates and record and home and 2019, then 2019 home record only.

And so on and so on until you are too sad to continue.

These logic trees can get very, very complicated, depending on the number of modifiers being accommodated, but don’t necessitate using natural language understanding so long as the user follows a fairly simple keyword-based conversational style.
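The boolean rules above can be sketched as a handful of `if` checks. This is only an illustration under simplifying assumptions: it matches single keywords, handles just the one team and the one year from the examples, and returns a label for the response rather than real stats. The function name `pick_response` is made up.

```python
import re


def pick_response(text: str) -> str:
    """Map keyword combinations to a response label, most specific rule first."""
    words = set(re.findall(r"[a-z0-9]+", text.lower()))
    has_team = {"pittsburgh", "pirates"} <= words
    has_stat = bool(words & {"record", "standings"})
    if not (has_team and has_stat):
        return "fallback"
    # Echo whichever word the user chose; both share one definition.
    term = "standings" if "standings" in words else "record"
    if "home" in words and "2019" in words:
        return f"2019 home {term}"
    if "home" in words:
        return f"current home {term}"
    return f"current {term}"
```

Order matters here: the most specific rule has to be checked first, or "Pittsburgh Pirates 2019 home record" would be caught by the broader "home" rule and answered with the current season. Every new modifier multiplies the branches, which is how these trees get complicated so quickly.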

Natural Language Understanding

Where language-based bots get more exciting is when natural language understanding engines get brought into the fold. Bots that use both NLP and natural language understanding (provided by services like IBM Watson, Amazon Lex, Google’s Dialogflow, or Facebook’s Wit.ai) can understand both what the user says (via identifying keywords that are defined in the bot’s vocabulary), and what the user means (by analyzing the context of the input, either by combining those keywords into more meaningful intents or even analyzing the input through a tone or sentiment analyzer).

Here’s an example of where NLU is a valuable and worthwhile addition to your NLP service, with the same sports bot.

A user asks, “What are the Buccos up to?”

A basic, keyword-based chatbot will go through this input and identify the following keywords:

What, Buccos, up, to. The bot may be trained on team nicknames like Buccos, but up to might trip up the bot, as “to” is missing its associated noun or pronoun (“this season” “this week” “this series” “these last ten games”) and the user is not referencing the direction up.

Natural language understanding helps us identify what the actual intent is. Buccos is a nickname for the Pittsburgh Pirates, up to is asking the status or state of the subject Buccos, so this user is likely asking what the Pittsburgh Pirates’ record is. We can perhaps guess that this user is asking about a more recent spate of games, but the time frame is unclear.

So, the bot responds with maybe 70% confidence in its answer:

The Pittsburgh Pirates finished the 2021 season in last place in the NL Central with 61 wins and 101 losses. What else would you like to know?

And now we're back to being sad.
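For a feel of what the "Buccos" example involves, here is a deliberately tiny stand-in for an NLU layer. Real services learn these mappings from training data; this sketch hard-codes them. The nickname table, the phrase table, the time words, and the 0.9 and 0.7 confidence values are all assumptions chosen to mirror the example, not output from any real engine.

```python
import re
from typing import Dict, Optional, Union

# Hypothetical lookup tables standing in for what an NLU engine would learn.
NICKNAMES = {"buccos": "Pittsburgh Pirates"}
PHRASE_INTENTS = {"up to": "team_status", "record": "team_status"}
TIME_WORDS = {"season", "week", "series", "today", "games"}


def interpret(text: str) -> Dict[str, Union[Optional[str], float]]:
    """Resolve nicknames and idioms, then lower confidence if no time frame is given."""
    lowered = text.lower()
    words = set(re.findall(r"[a-z0-9]+", lowered))
    team = next((full for nick, full in NICKNAMES.items() if nick in words), None)
    intent = next((i for phrase, i in PHRASE_INTENTS.items() if phrase in lowered), None)
    if team is None or intent is None:
        confidence = 0.0
    elif words & TIME_WORDS:
        confidence = 0.9  # assumed value: team, intent, and time frame all known
    else:
        confidence = 0.7  # assumed value: the time frame is a guess
    return {"team": team, "intent": intent, "confidence": confidence}
```

"What are the Buccos up to?" resolves to the Pittsburgh Pirates and a status request at 0.7, while "What are the Buccos up to this week?" gets the higher score because the time frame is no longer a guess.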

Adding a Chatbot to your Communication Strategy

Depending on what you want your chatbot to do, or how you want it to be powered, a chatbot might be a valuable addition to your communication strategy. Well-built and well-structured chatbots can help reduce operational costs over time, reduce human hours needed to complete automatable tasks, and reduce call center volume.

Chatbots can also provide an important brand moment for your product or service. But they don’t come without cost to your entire organization, even for your UX team. Aside from the overarching UX cost of content strategy, conversational design, brand personality work, and continued UX maintenance, good chatbots are usually also built on pre-existing NLP algorithms. These can get expensive, especially if you’re investing in a custom or domain-specific NLP.

Chatbots also require a significant amount of training and maintenance as their scope expands, interactions with them increase, data sets grow, and gaps are identified. You’ll likely need to staff several team members dedicated solely to an out-of-the-box bot, and many more for a custom bot. You’ll need UX practitioners - conversational designers, brand strategists, information architects, and perhaps a visual designer here or there. You’ll need data scientists to train your NLP and engineers to implement your bot on your platform. You’ll also need testers to ensure your training regimen is working and to catch any bugs before release. Your bot will need to be designed, built, trained, tested, revised, trained, and tested again, often for its lifetime.

With the basics of what chatbots can do and how their brains work out of the way, stay tuned for part two, where we’ll go through UX content strategy and conversational design best practices for chatbots.

Resources and Further Reading

A Simple Introduction to Natural Language Processing

Chatbot Categories and Their Limitations

Computer, respond to this email.

Deep Learning for Chatbots - Part 1

Natural Language Understanding for Task Oriented Chatbots

NLP vs NLU: What’s The Difference?