Benchmark Your Unmoderated User Testing with Nagg

Curt Arledge, Former Director of UX Strategy

How to automatically split your research participants between multiple studies.

Unmoderated user testing is an important tool in any user researcher’s toolkit. At Viget, we often use Optimal Workshop’s unmoderated tree-testing tool, Treejack, to make sure that users can find what they’re looking for in a website’s navigation menu. In this article, I’ll be talking specifically about Treejack, but you can substitute in the unmoderated testing tool of your choice.

There are two basic ways to use Treejack: to evaluate the labeling system of an existing site, or to evaluate a new, proposed labeling system. But the most powerful way to use Treejack is to do both at once. That way, we can not only identify problems with the existing information architecture, but also see whether our proposed redesign actually solves those problems. The existing tree acts as a benchmark against which we can compare our new tree.

Optimal Workshop doesn’t currently provide a way to test more than one tree in a single study or to split participants randomly between two studies, though they do suggest some sample JavaScript for randomizing a link destination between two or more study URLs. That script only helps if the link lives on a page you control. If you’re recruiting via email or social media, you’ll need a way to handle the destination-splitting without front-end code. That’s where nagg comes in.

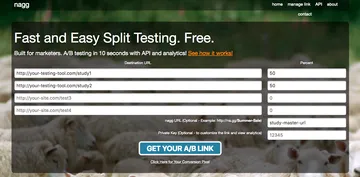

Nagg (na.gg) is a simple utility that generates a custom nagg URL that splits traffic between up to four URLs at specified percentages. For the purposes I’m describing, you would enter two URLs at 50% to distribute traffic evenly. Nagg also lets you view a breakdown of link traffic by time, country, browser, and more.
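To make the idea of percentage-based splitting concrete, here is a minimal Python sketch of a weighted random choice between destinations. It is not nagg's actual implementation, which I haven't seen; the function name `pick_destination` and the `example.com` study URLs are hypothetical placeholders.

```python
import random
from collections import Counter

# Hypothetical study URLs paired with the percentage of traffic each should get.
STUDIES = [
    ("https://example.com/study-existing-tree", 50),
    ("https://example.com/study-proposed-tree", 50),
]


def pick_destination(destinations, rng=random):
    """Return one URL, chosen at random in proportion to its percentage."""
    urls = [url for url, _ in destinations]
    weights = [pct for _, pct in destinations]
    if sum(weights) != 100:
        raise ValueError("percentages must add up to 100")
    # random.choices performs a weighted draw; k=1 yields a one-item list.
    return rng.choices(urls, weights=weights, k=1)[0]


if __name__ == "__main__":
    # Simulate 1,000 clicks with a seeded generator so the run is repeatable.
    rng = random.Random(42)
    counts = Counter(pick_destination(STUDIES, rng) for _ in range(1000))
    for url, n in counts.items():
        print(url, n)
```

Because each click is an independent draw, a 50/50 setting gives roughly, not exactly, equal groups. With a small number of participants the two studies can end up noticeably uneven, so it's worth checking the counts as responses come in.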

The destination URLs you’ll enter should be for separate Treejack studies, one with the existing tree and one with your proposed new tree. Both studies should use the exact same tasks, so that you can accurately compare the results of each study. Optimal Workshop makes all of this easy by letting you duplicate studies and import/export trees from/to a spreadsheet. This is extra helpful when there are a lot of tasks or very large trees.
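Once both studies have results, you can compare success rates task by task. The sketch below is one way to do that in Python, using a two-proportion z-test. That test is my own choice for illustration, not something Treejack or Optimal Workshop prescribes, and the function name and the counts in the usage example are hypothetical. You would substitute the success counts and participant totals reported for the same task in each study.

```python
import math


def compare_success_rates(successes_a, total_a, successes_b, total_b):
    """Compare task success in study A (existing tree) and study B (new tree).

    Returns (rate_a, rate_b, z, p_value), where p_value is two-sided.
    """
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    # Pooled success rate under the assumption that both trees perform the same.
    pooled = (successes_a + successes_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (rate_b - rate_a) / std_err if std_err > 0 else 0.0
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value


if __name__ == "__main__":
    # Hypothetical counts for one task: 40 of 100 succeeded on the existing
    # tree, 60 of 100 on the proposed tree.
    rate_a, rate_b, z, p_value = compare_success_rates(40, 100, 60, 100)
    print(f"existing: {rate_a:.0%}  proposed: {rate_b:.0%}  z={z:.2f}  p={p_value:.4f}")
```

A small p-value suggests the difference between trees on that task is unlikely to be chance alone. With only a handful of participants per study, treat the result as a rough guide rather than proof.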

This isn’t A/B testing per se, since participants know they’re taking a test rather than being observed without their knowledge. That means your test design is still susceptible to bias, so you should follow Treejack best practices like randomizing task order and keeping target terms out of your task prompts.

Automatic link destination-splitting with nagg is the missing piece of the puzzle that lets you benchmark your new labeling system against the one that already exists. Whether your unmoderated testing tool is Treejack or something else, you can use nagg to easily test against a benchmark when evaluating a new design.

Hat tip to Paul, who pointed me to nagg.