Apple's Future Vision

A UX Strategist's take on adapting to the mixed reality future of visionOS.

As someone who enjoys keeping tabs on the latest tech and product releases, I am intrigued to learn more about Apple’s take on virtual reality, or, as Apple calls it, spatial computing. Others have occupied this space for a while (notably Meta with Quest), but the bulk of use has been concentrated on video games. VR gaming can be fun, especially if you are not prone to VR sickness, but I find the potential beyond gaming more interesting to contemplate.

The current landscape of devices spans virtual reality, augmented reality, and mixed reality.

- Virtual reality: A fully immersive experience with no trace of your physical surroundings.

- Augmented reality: Virtual content overlaid on or inserted into your physical surroundings, which remain visible and part of the experience.

- Mixed reality: Blending both virtual and physical experiences into one.

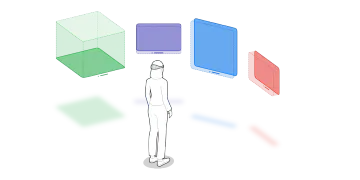

What today's devices can do hints at fascinating potential as interest in adopting this technology grows. It is certainly possible to use current VR devices for more productivity-oriented tasks, such as a giant virtual screen for your computer, but they have not been widely adopted for that purpose yet. Ergonomics are one limiting factor. However, I also think the approach existing hardware and operating systems have taken has affected how easy and appealing that adoption is. Despite the progress of the last few years, I do not think anyone has nailed the concept of a virtual operating system.

With the new visionOS release, I find the term "spatial computing" quite apt for that very reason. It might sound like an odd abstraction, but I see where Apple is going with it. In an attempt to shed perceptions of past virtual reality experiences, the new vernacular points toward a different path, one with the potential to expand into entirely new types of experiences. Digging into the available documentation shows how Apple has established a foundation for virtual operating systems, along with standards that will shape future iterations and efforts.

“... an infinite spatial canvas to explore, experiment, and play, giving you the freedom to completely rethink your experience in 3D. People can interact with your app while staying connected to their surroundings, or immerse themselves completely in a world of your creation.”

- Apple

Understanding how to interact with interfaces using different input methods will help us envision new experiences. In this world of mixed reality, the mouse and keyboard are optional input devices, while your eyes and hand gestures are the primary way to control the experience. This approach sets the Vision Pro apart from existing products, which typically require physical controllers. Eye tracking and hand gesture input look promising, but they have also proven less than ideal in certain situations, such as typing on a virtual keyboard. Regardless, I think the approach to accessibility here is worth celebrating: giving people more input options is always better for accessibility. Additionally, as we adapt to this new format, some tasks will inherently be much easier and better suited to the medium, while others will not. It will take some time to figure out which is which, but the growing momentum and progress will undoubtedly inspire new approaches to these challenges.

Past lessons that may apply to the future

Just as the smartphone ushered in touch input and touch gestures for our digital experiences, the paradigm shift to mixed reality appears to bring natural human interaction much closer to the technology we use every day.

New input and interaction possibilities also bring new avenues for creating more inclusive and accessible experiences. For example, eye-tracking software for computer input is an assistive technology option for people with limited motor ability. However, these systems typically require specialized equipment and software to work.

Building this type of input into the system as a default method seems like a real benefit for accessible experiences. It also moves assistive technology from a secondary consideration or third-party-only option to the new baseline for interacting with technology. With that in mind, we'll need to plan for experiences that accommodate people's gaze and peripheral vision as their eyes track across an interface, highlighting content along the way.

New constraints and new possibilities

I am also curious to see how people will approach familiar tasks in this new realm. Mixed reality experiences will have their strengths, and when designing for them we'll need to make sure we play to those strengths. I also expect that some applications will not leap easily into the spatial world, at least not without major rethinking. Applications requiring precise input and control, such as vector illustration, are likely best served by a mouse or tablet, but I do not think a hybrid approach that balances the best of both worlds is ruled out. In the immediate future, some visionOS examples of new spatial interfaces hint at familiar experiences transformed into three dimensions.

The third dimension brings us to another exciting facet of adapting interfaces for new spatial experiences. Again, we can take cues from the changes we adopted for mobile and touchscreen devices: responsive design for multiple screen sizes and orientations pushed us to create experiences that morph seamlessly across a wide range of breakpoints.

To account for how people will use spatial interfaces, we'll need to consider new terrain such as field of view, distance, depth, and viewing angle. Some aspects of designing for spatial experiences will bring interesting new challenges, but I think they will also open up equally interesting possibilities. The complete freedom to place apps and screens anywhere you wish could be overwhelming without guardrails. However, freed from two-dimensional boxes and limited screen space, the infinite canvas might finally feel truly infinite.

Predicting how it will transform our experiences

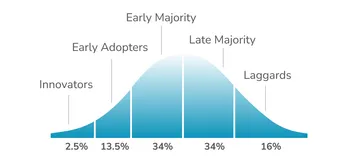

We are certainly in the early-adopter days of this technology, and it will likely be a few more years before most people use spatial computing devices. I expect some bumps along the way as we figure out the best use cases for spatial computing. Nonetheless, the progress so far is exciting as things continue to shift and evolve. Mobile augmented reality experiences already offer a hint of how and where things might progress.

Some early mobile AR approaches may have felt a bit gimmicky, adding little value to an experience. Over time, however, I think the more common uses of mobile AR we see today show how the technology has matured. It has become an invaluable tool for shopping and for precisely measuring and representing building interiors, in contexts ranging from real estate to construction and renovation. I am looking forward to seeing those augmented reality experiences carry over to a platform like visionOS. For example, watching Star Wars with Tatooine as your surroundings, or joining a video call and sharing 3D objects, sounds quite amazing.

Perhaps we’ll glimpse a futuristic sci-fi interface sooner than we think.